Why?

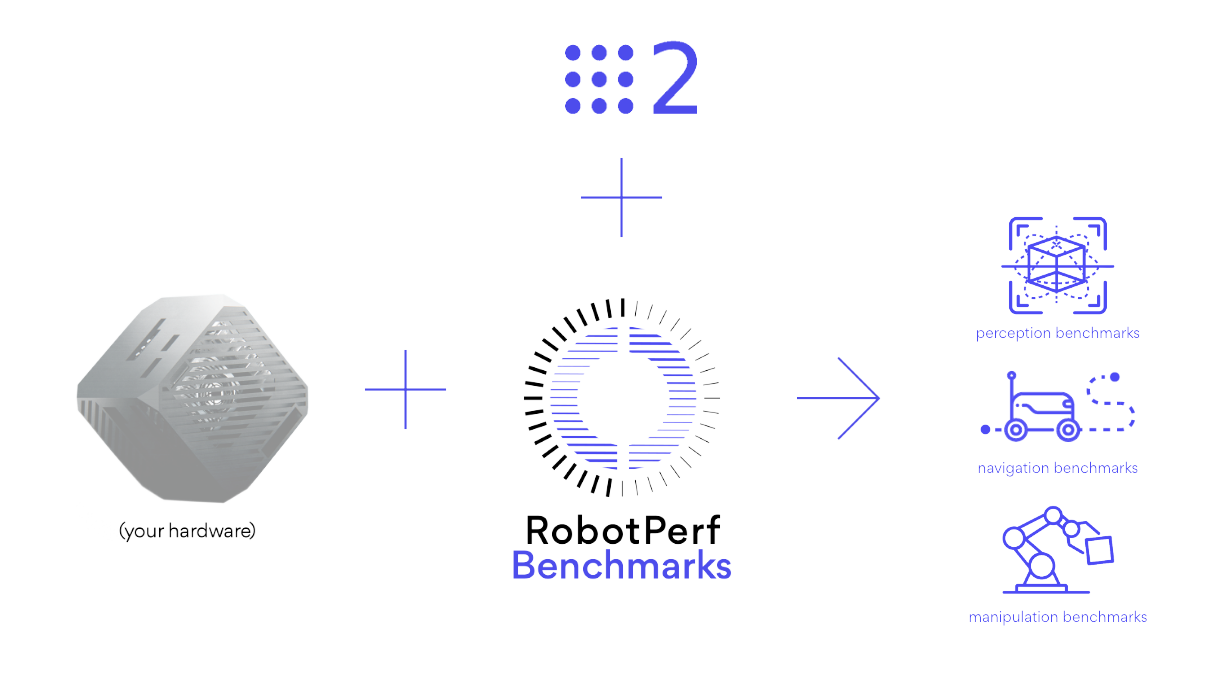

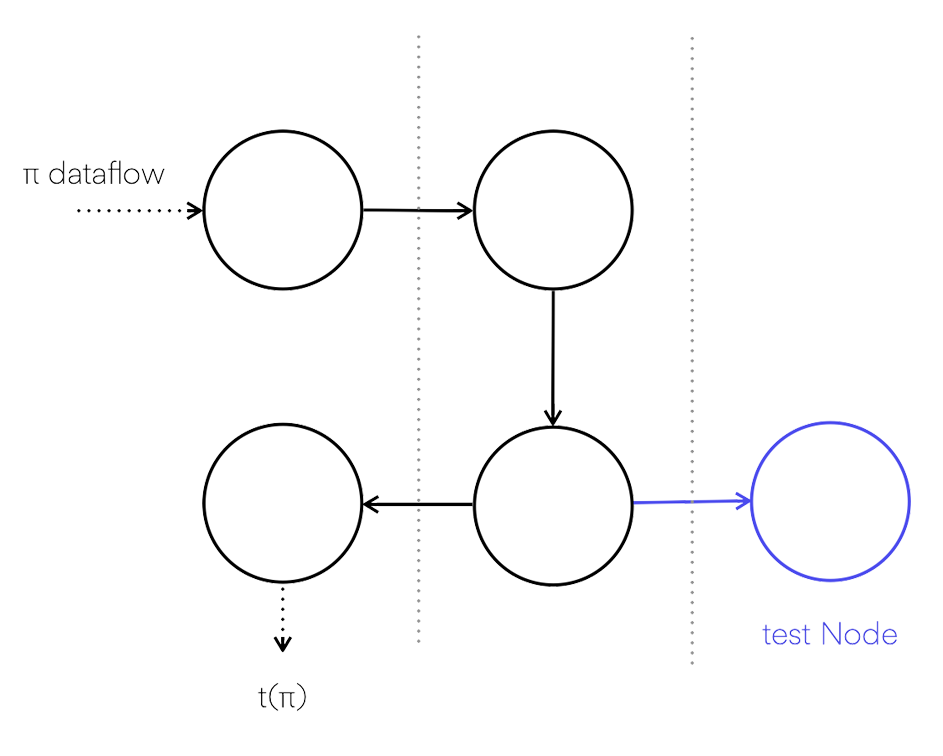

Robot behaviors take the form of computational graphs, with data flowing between computation nodes across physical networks (communication buses) while mapping to underlying sensors and actuators. The most popular way to build these computational graphs today is the Robot Operating System (ROS), a framework for robot application development. ROS lets you build computational graphs and create robot behaviors by providing libraries, a communication infrastructure, drivers, and tools to put it all together. Most companies building real robots today use ROS or similar event-driven software frameworks. ROS is thereby the common language in robotics, with several hundred companies and thousands of developers using it every day. ROS 2 was redesigned from the ground up to address some of the challenges in ROS and solves many of the problems in building reliable robotics systems.
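
To make the idea of a computational graph concrete, here is a minimal sketch in plain Python. It does not use ROS or any ROS API: the `Graph` class, the `run_demo` function, the topic names `scan` and `cmd_vel`, and the 0.5 m threshold are all hypothetical, chosen only to illustrate data flowing from a sensor node through a computation node to an actuator node in an event-driven way.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List


class Graph:
    """Toy in-process message bus (hypothetical, not a ROS API)."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        # Register a callback that runs whenever a message arrives on the topic.
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: Any) -> None:
        # Deliver the message synchronously to every subscriber of the topic.
        for callback in list(self._subscribers[topic]):
            callback(message)


def run_demo(range_readings: List[float]) -> List[float]:
    graph = Graph()
    commands: List[float] = []

    # Computation node: turns a range reading (meters) into a speed command.
    def controller(distance_m: float) -> None:
        speed = 0.0 if distance_m < 0.5 else 0.2  # assumed threshold and speed
        graph.publish("cmd_vel", speed)

    graph.subscribe("scan", controller)

    # Actuator node: records the commands it would forward to a motor driver.
    graph.subscribe("cmd_vel", commands.append)

    # Sensor node: publishes each reading onto the graph.
    for reading in range_readings:
        graph.publish("scan", reading)

    return commands


if __name__ == "__main__":
    print(run_demo([1.0, 0.3, 0.8]))
```

In a real robot the nodes would typically run in separate processes or on separate machines, and the communication layer would carry messages across the physical network rather than through direct function calls.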

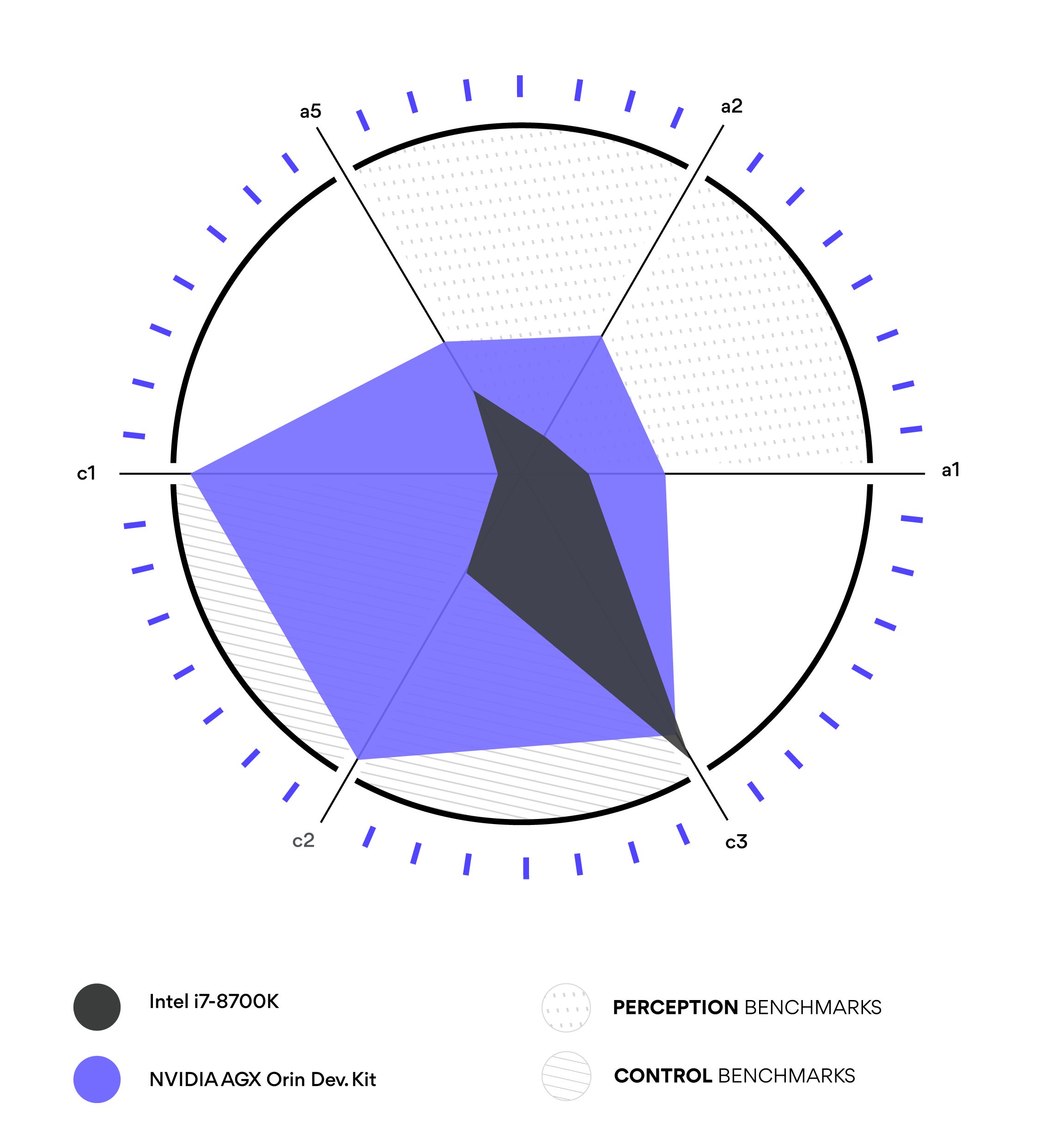

ROS 2 is a modern and popular framework for robot application development that most silicon vendor solutions support. Its variety of modular packages, each implementing a different robotics feature, simplifies performance benchmarking in robotics.

Which companies are using ROS?

More about ROS